photo by Roger Cozien

I sat down with Tim Kohler, the creator of the Village Ecodynamics Project agent-based model, professor of anthropology at Washington State University, researcher at Crow Canyon Archaeological Center, and external faculty at the Santa Fe Institute, to discuss his philosophy on complexity science and archaeology, and get some tips for going forward studying complex systems.

How did you get introduced to complexity science?

I took a sabbatical in the mid-1990s and was fortunate to be able to do it at the Santa Fe Institute. Being there right when Chris Langton was developing Swarm, and just looking over his shoulder while he was developing it, was highly influential; Swarm was the original language that we programmed the Village Ecodynamics Project in. Being able to interact with scientists of many different types at the Santa Fe Institute (founded in 1984) was wonderful. This was not an opportunity available to many archaeologists, so one of the burdens I bear, which is honestly a joyful burden, is that having had that opportunity I need to pass it on to others who weren’t so lucky. This really was my motive for writing Complex Systems and Archaeology in “Archaeological Theory Today” (second edition).

What complexity tools do you use and how?

I primarily use agent-based modeling, although in Complex Systems and Archaeology I recognize the value of the many other tools available. But I’d point out that I do an awful lot of work that is traditional archaeology too. I recently submitted an article that attempts to look at household-level inequality from the Dolores Archaeological Project data, and this is traditional archaeological inquiry. I do these studies because I think that they contribute in an important way to understanding whether or not an exercise such as the development of leadership model gives us a sensible answer. This feeds into traditional archaeology.

In 2014 I published an article calculating levels of violence in the American Southwest. This is traditional archaeology, although it does use elements of complexity. I can’t think of other instances where archaeologists have tried to analyze trajectories of things through time in a phase-space like I did there. The other thing that I do that is kind of unusual in archaeology (not just complexity archaeology) is that I have spent a lot of time and effort trying to estimate how much production you can get off of landscapes. Those things have not really been an end in themselves, although they could be seen as such. However, I approached trying to estimate the potential production of landscapes so that it could feed into the agent-based models. Thus these exercises contribute to complex systems approaches.

What do you think is the unique contribution that complexity science has for archaeology?

I got interested in complexity approaches in the early to mid-1990s. At that time, if you looked around the theoretical landscape, there were two competing approaches on offer in archaeology: 1) processualism (the New Archaeology), and 2) the reaction to processualism, post-processualism, which came from the post-modern critique.

First, with processualism. There has been a great deal of interesting and useful work done through that framework, but if you look at some of that work it really left things lacking. An article that really influenced my feelings on that approach was Feinman’s famous article “Too Many Types: An Overview of Sedentary Prestate Societies in the Americas” from Advances in Archaeological Method and Theory (1984). He does a nice analysis in the currency of variables having to do with maximal community size, comparison of administrative levels, leadership functions, etc. I would argue that these variables are always a sort of abstraction from the point of view of the analyst. And people, as they are living their daily lives, are not aware of channeling their actions along specific dimensions that can be extracted as variables; people don’t make variables, they act! It’s only through secondary inference that some outcome of their actions (and in fact those of many others) can be distilled as a ‘variable.’ My main objection to processualism is that everything is a variable, and more often than not these variables are distilled at a very high level of abstraction for analysis. Leadership functions, the number of administrative levels… but there’s never a sense in processual archaeology (in my view) of how it is through people’s actions that these variables emerge and these high levels came to be. I thought this was a major flaw in processualism.

If you look at post-processualism at its worst (people like Tilley and Shanks in the early 1990s), you have this view of agency… People are acting almost without structures. There’s no predictability to their actions. No sense of optimality or adaptation that structures their actions. Although I would admit that these positions did have the effect of exposing some of the weaknesses in processual archaeology, they didn’t offer a positive program for going forward to understand prehistory.

I thought what was needed was a way to think about the archaeological record as being composed of the actions of agents, while giving the proper role to the sorts of structures that these agents had to operate within (people within societies). I also thought that a proper role needed to be given to concepts like evolution and adaptation that were out the window for the early post-processualists. That is what complexity in archaeology tries to achieve. A complex adaptive systems approach honors the actions of individuals but also honors that agents have clear goals that provide predictability to their actions, and that these actions take place within structures, such as landscapes or ecosystems or cities, that shape them in relatively predictable ways.

How does complexity help you understand your system of interest?

Complexity approaches give us the possibility to examine how high-level outcomes emerge from agent-landscape interaction and agent-agent interaction. These approaches to a great measure address the weaknesses of the two main approaches from the ’90s (processualism and post-processualism). We have both high-level outcomes (processualism) and agent-level actions (post-processualism), and complexity provides a bridge between the two.

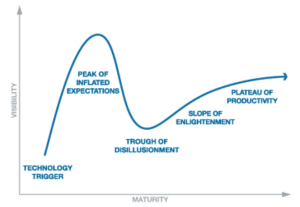

What are the barriers we need to break to make complexity science a mainstream part of archaeology?

Obviously barriers need to be broken. Early on, although this is not the case as much any more, many students swallowed the post-processual bait hook, line and sinker, which meant they wouldn’t be very friendly to complexity approaches. They were, in a sense, blinded by theoretical prejudices. This is much less true now, and becomes less true each year. The biggest barrier to entry now is the fact that very few faculty are proficient in the tools of complex adaptive systems in archaeology, such as agent-based modeling and scaling studies. Many faculty are not even proficient with post hoc analyses in tools like R that make sense of what’s going on in these complex systems. Until we get a cadre of faculty who are fluent in these approaches, this will be a main barrier.

Right now the students are leading the way in complex adaptive systems studies in archaeology. In a way, this is similar to how processual archaeology started—it was the students who led the way then too. Students are leading the way right now, and as they become faculty it will be enormously useful for the spread of those tools. So all of these students need to get jobs to be able to advance archaeology, and that is a barrier.

Do you think that archaeology has something that can uniquely contribute to complexity science (and what is it)?

I would make a strong division between complex adaptive systems (anything that includes biological and cultural agents) and complex nonadaptive systems (spin glasses, etc.) where there is no sense that there is some kind of learning or adaptation. Physical systems are structured by optimality but there is no learning or adaptation.

The one thing that archaeologists have to offer that is unique is the really great time depth that we always are attempting to cope with in archaeology.

The big tradeoff with archaeology is that, along with deep time depth, we have very poor resolution for the societies that we are attempting to study. But this gives us a chance to develop tools and methods that work with complex adaptive systems specifically within social systems; this, of course, is not unique to archaeology, as it is true for economists and biologists as well.

What do you think are the major limitations of complexity theory?

I don’t think complexity approaches, so far at least, have had much to say about the central construct for anthropology: culture. Agent-based models, for example, and social network analysis are much more attuned to behavior than to culture. So far, researchers have not tried to use these tools to understand culture change as opposed to behavioral change. It’s an outstanding problem. And this has got to be addressed if the concept of culture remains central to anthropology (which, by definition, it will). Unless complexity can usefully address what culture is and how it changes, complexity will always be peripheral. Strides have been made in that direction, but the citadel hasn’t been taken.

Does applying complexity theory to a real world system (like archaeology) help alleviate the limitations of complexity and make it more easily understandable?

Many people who aren’t very interested in science are really interested in archaeology. So I think archaeology offers a unique possibility for science generally, and complexity specifically, by being applied to understanding something that people are intrinsically interested in, even if they aren’t interested in other applications of the same tools to other problems. It’s non-threatening. You can be liberal or conservative and you can be equally interested in what happened to the Ancestral Puebloans; you might have a predilection for one answer or another, but you are still generally interested. But these things are non-threatening in an interesting way. They provide a showcase for these powerful tools that might be more threatening if they were used in an immediate fashion.

What do you recommend your graduate students start on when they start studying complexity?

Dynamics in Human and Primate Societies by Kohler and Gumerman is a useful starting point.

I am a big enthusiast for many works that John Holland wrote.

Complexity: A Guided Tour by Melanie Mitchell is a great volume.

I learned an enormous amount from a close reading of Stu Kauffman’s “Origins of Order.” I read this during my first sabbatical at SFI, and if you were to look at my copy you’d see all sorts of marginal annotations in it. We don’t see him cited much nowadays, but he did make important contributions to understanding complex systems.

In terms of technology or classes, the most important thing would be for them to get analytical and modeling tools as early as they can. In the case of Washington State University, taking the agent-based modeling course and the R and Big Data course would be essential. But to be a good archaeologist you need a good grounding in method and theory, so taking courses that fulfill that as early on as possible is essential.

And a final question…

What are two current papers/books/talks that influence your recent work?

I’m always very influenced by the work of my students. One of my favorites is the 2014 Bocinsky and Kohler article in Nature Communications. Another is upcoming foodwebs work from one of my other students. These papers are illustrative of the powers of complexity approaches. Bocinsky’s article is not in and of itself a contribution to complex adaptive systems in archaeology, except that it is in the spirit of starting off from a disaggregated entity (cells on a landscape) and ending up with a production sequence emerging from that for the system as a whole. It shows how we can get high-level trends that can be summarized by amounts within the maize niche. So it deals, in a funny way, with the processes of emergence. It’s a prerequisite for doing the agent-based modeling work.

Some recent works by Tim Kohler

2014 (first author, with Scott G. Ortman, Katie E. Grundtisch, Carly M. Fitzpatrick, and Sarah M. Cole) The Better Angels of Their Nature: Declining Violence Through Time among Prehispanic Farmers of the Pueblo Southwest. American Antiquity 79(3): 444–464.

2014 (first author, with Kelsey M. Reese) A Long and Spatially Variable Neolithic Demographic Transition in the North American Southwest. PNAS (early edition).

2013 How the Pueblos got their Sprachbund. Journal of Archaeological Method and Theory 20:212-234.

2012 (first author, with Denton Cockburn, Paul L. Hooper, R. Kyle Bocinsky, and Ziad Kobti) The Coevolution of Group Size and Leadership: An Agent-Based Public Goods Model for Prehispanic Pueblo Societies. Advances in Complex Systems 15(1&2):1150007.

2012 (first editor, with Mark D. Varien) Emergence and Collapse of Early Villages: Models of Central Mesa Verde Archaeology. University of California Press, Berkeley