People love power-laws. In the 90s and early 2000s it seemed like they were found everywhere. Yet early power-law studies did not subject the data distributions to rigorous tests, which decreased the potential value of some of these studies. Since an influential 2009 study by Aaron Clauset of CU Boulder, Cosma Shalizi of Carnegie Mellon, and Mark Newman of the University of Michigan, researchers have become aware that not all distributions that look power-law-like are actually power-laws.

But power-law analyses can be incredibly useful. In this post I first show you what a power-law is, then present a case study where these analyses are appropriate, and finally walk you through how to use them to understand distributions in your data.

What is a power-law?

A power-law describes a distribution of something (wealth, connections in a network, sizes of cities) that follows what is known as the law of preferential attachment. In a power-law there are many of the smallest objects, with increasingly fewer of the larger objects. However, the largest objects disproportionately get the highest quantities of stuff.

The World Wide Web follows a power-law. Many sites (like Simulating Complexity) get small amounts of traffic, but some sites (like Google, for example) get high amounts of traffic. Then, because they get more traffic, they attract even more visits. Cities also tend to follow power-law distributions, with many small towns and few very large cities. But those large cities seem to keep getting larger. Austin, TX, for example, gains 157.2 new citizens per day, making it the fastest growing city in the United States. People are attracted to it because people keep moving there, which perpetuates the growth. Theoretically there should be a limit, though maybe the limit will be turning our planet into a Texas-themed Coruscant.
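To make the rich-get-richer idea concrete, here is a minimal sketch in base R. It is not taken from any of the studies mentioned in this post; the function name `simulate_rich_get_richer` and the `p_new` parameter are made up for illustration. At every step a new unit (a visit, a resident) either starts a brand-new group or joins an existing group with probability proportional to that group's current size.

```r
set.seed(42)

# Toy preferential-attachment process (an illustrative assumption, not a fitted model).
# n_steps: number of units added; p_new: chance that a unit founds a new group.
simulate_rich_get_richer <- function(n_steps, p_new = 0.1) {
  sizes <- numeric(n_steps + 1)  # preallocate the largest possible number of groups
  sizes[1] <- 1                  # start with one group of size 1
  n_groups <- 1
  for (i in seq_len(n_steps)) {
    if (runif(1) < p_new) {
      # found a new group of size 1
      n_groups <- n_groups + 1
      sizes[n_groups] <- 1
    } else {
      # join an existing group, chosen in proportion to its current size
      winner <- sample.int(n_groups, 1, prob = sizes[seq_len(n_groups)])
      sizes[winner] <- sizes[winner] + 1
    }
  }
  sizes[seq_len(n_groups)]
}

sizes <- simulate_rich_get_richer(5000)
summary(sizes)                       # most groups stay tiny
sort(sizes, decreasing = TRUE)[1:5]  # a few groups are very large
```

With these settings a handful of groups should end up far larger than the typical group, which is the kind of heavy tail described above.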

This is in direct contrast to log-normal distributions. Log-normal distributions follow the law of proportional effect. This means that as something increases in size, it is predictably larger than what came before it. Larger things in log-normal distributions do not attract exponentially more things… they have a proportional amount of what came before. For example, experience and income should follow a log-normal distribution. As someone works in a job longer they should get promotions that reflect their experience. When we look at incomes of all people in a region we see that when incomes are more log-normally distributed these reflect greater equality, whereas when incomes are more power-law-like, inequality increases. Modern incomes seem to follow log-normality up to a point, after which they follow a power-law, showing that the richest attract that much more wealth, but under a certain threshold wealth is predictable.
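The law of proportional effect can be sketched the same way. In this toy base R simulation (the growth range of 0.9 to 1.12 and the variable names are arbitrary assumptions), every unit grows each step by a random percentage of its current size, and that percentage does not depend on how big the unit already is. Multiplying many such random factors together gives sizes whose logarithms are approximately normal, which is what log-normal means.

```r
set.seed(42)

n_units <- 5000   # number of independent units (people, settlements, ...)
n_steps <- 200    # number of growth steps

sizes <- rep(1, n_units)
for (step in seq_len(n_steps)) {
  # growth is a random proportion of current size, regardless of that size
  sizes <- sizes * runif(n_units, min = 0.9, max = 1.12)
}

log_sizes <- log(sizes)

# Sample skewness of the logged sizes; values near 0 indicate a symmetric,
# roughly normal shape on the log scale.
skewness <- mean((log_sizes - mean(log_sizes))^3) / sd(log_sizes)^3
skewness

# hist(sizes) is right-skewed, while hist(log_sizes) looks like a bell curve
```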

If we analyze the distribution of modern incomes in a developing nation and see that they follow a power-law distribution, we will understand that there is a ‘rich get richer’ dynamic in that country, whereas if we see the incomes follow a log-normal distribution we would understand that that country had greater internal equality. We might want to know this to help influence policy.

When we analyze power-laws, however, we don’t want to just look at the graph that is created and say “Yeah, I think that looks like a power-law.” Early studies seemed to do just that. Thankfully Clauset et al. came up with rigorous methods to examine a distribution of data and see if it’s a power-law, or if it follows another distribution (such as log-normal). Below I show how to use these tools in R.

Power-law analyses and archaeology

So, if modern analyses of these distributions can tell us something about the equality (log-normal) or inequality (power-law) of a population, then these tools can be useful for examining the lifeways of past people. Questions we might be interested in asking are whether prehistoric cities also follow a power-law distribution, suggesting that the largest cities offered more social (and potentially economic) benefits similar to modern cities. Or we might want to understand whether societies in prehistory were more egalitarian or more hierarchical, thus looking at distributions of income and wealth (as archaeologists define them) to examine these. Power-law analyses of distributions of artifacts or settlement sizes would enable us to understand the development of inequality in the past.

Clifford Brown et al. talked about these very issues in their chapter “Poor Mayapan” in the book The Ancient Maya of Mexico, edited by Braswell. While they don’t use the statistical tools I present below, they do present good arguments for why and when power-law versus other types of distributions would occur, and I would recommend tracking down this book and reading it if you’re interested in using power-law analyses in archaeology. Specifically they suggest that power-law distributions would not occur randomly, so there is intentionality behind those power-law-like distributions.

I recently used power-law and log-normal analyses to try to understand the development of hierarchy in the American Southwest. The results of this study will be published in 2017 in American Antiquity. Briefly, I wanted to look at multiple types of evidence, including ceremonial structures, settlements, and simulation data to understand the mechanisms that could have led to hierarchy and whether or not (and when) Ancestral Pueblo groups were more egalitarian or more hierarchical. Since I was comparing multiple different datasets, a method to quantitatively compare them was needed. Thus I turned to Clauset’s methods.

These had been updated by Gillespie in the R package poweRlaw.

Below I will go over the poweRlaw package with a built-in dataset, the Moby Dick words dataset. This dataset counts the frequency of different words. For example, there are many instances of the word “the” (19815, to be exact) but very few instances of other words, like “lamp” (34 occurrences) or “choice” (5 occurrences), or “exquisite” (1 occurrence). (Side note, I randomly guessed at each of these words, assuming each would have fewer occurrences. My friend Simon DeDeo tells me that ‘exquisite’ in this case is a hapax legomenon, or a term that only has one recorded use. Thanks Simon.) To see more go to http://roadtolarissa.com/whalewords/.

In my research I used other datasets that measured physical things (the size of roomblocks, kivas, and territories) so there’s a small mental leap for using a new dataset, but this should allow you to follow along.

The Tutorial

Open R.

Load the poweRlaw package

library("poweRlaw")

Add in the data

data("moby", package="poweRlaw")

This will load the data into your R session.

Side note:

If you are loading in your own data, you first load it in like you normally would, e.g.:

data <- read.csv("data.csv")

Then if you were subsetting your data you’d do something like this:

a <- subset(data, Temporal_Assignment != "Pueblo III (A.D. 1140-1300)")
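One step that is easy to miss with your own data: as far as I know, the poweRlaw constructors expect a plain numeric vector, not a whole data frame, so you need to pull out the column you want to analyze. The sketch below uses a tiny made-up data frame in place of read.csv; the `Size` column, the "Period A" and "Period B" labels, and all the numbers are hypothetical.

```r
# Made-up stand-in for read.csv("data.csv"); only Temporal_Assignment and the
# Pueblo III label appear in the tutorial text, everything else is hypothetical.
data <- data.frame(
  Temporal_Assignment = c("Period A", "Period B",
                          "Pueblo III (A.D. 1140-1300)", "Period A"),
  Size = c(12.5, 40.2, 99.0, NA)
)

# Drop the period we do not want, as in the subsetting line above
a <- subset(data, Temporal_Assignment != "Pueblo III (A.D. 1140-1300)")

# Extract the measurement column as a numeric vector and remove
# missing or non-positive values before fitting anything
sizes <- a$Size
sizes <- sizes[!is.na(sizes) & sizes > 0]
sizes
```

The resulting `sizes` vector is what you would hand to `conpl$new()` or `displ$new()` in the steps that follow.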

Next you have to decide if your data is discrete or continuous. What do I mean by this?

Discrete data can only take on particular values. In the case of the Moby Dick dataset, since we are counting physical words, this data is discrete. You can have 1 occurrence of exquisite and 34 occurrences of lamp. You can’t have 34.79 occurrences of it—it either exists or it doesn’t.

Continuous data is something that doesn’t fit into simple entities, but whose measurement can exist on a long spectrum. Height, for example, is continuous. Even if we bin people’s heights into neat categories (e.g., 6 feet tall, or 1.83 meters) the person’s height probably has some trailing digit, so they aren’t exactly 6 feet, but maybe 6.000127 feet tall. If we are being precise in our measurements, that would be continuous data.

The data I used in my article on kiva, settlement, and territory sizes was continuous. This Moby Dick data is discrete.

The reason this matters is the poweRlaw package has two separate functions for continuous versus discrete data. These are:

conpl for continuous data, and

displ for discrete data

You can technically use either function and you won’t get an error from R, but the results will differ slightly, so it’s important to know which type of data you are using.

In the tutorial written here I will be using the displ function since the Moby dataset is discrete. Substitute in conpl for any continuous data.
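If you are unsure which case you are in, a quick check on the values can help. This is only a heuristic sketch in base R with hypothetical helper names (`is_whole_number_data`, `choose_pl_constructor`): whole-number values suggest counts, but measurements that were rounded to whole units would also pass, so you still need to think about what was actually measured.

```r
# TRUE when every non-missing value is (numerically) a whole number
is_whole_number_data <- function(x, tol = 1e-8) {
  x <- x[!is.na(x)]
  all(abs(x - round(x)) < tol)
}

# Suggest which poweRlaw constructor name to use; returns a string only,
# so this runs without the package loaded
choose_pl_constructor <- function(x) {
  if (is_whole_number_data(x)) "displ" else "conpl"
}

choose_pl_constructor(c(1, 34, 5))         # word counts
choose_pl_constructor(c(6.000127, 5.5))    # heights in feet
```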

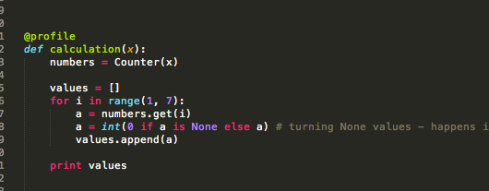

So, to create the power-law object, we first pass the data to displ:

pl_a <- displ$new(moby)

We then want to estimate the x-min value. Power-laws are usually only power-law-like in their tails… the early part of the distribution is much more variable, so we find a minimum value below which we say “computer, just ignore that stuff.”

However, first I like to look at what the x_min values are, just to see that the code is working. So:

pl_a$getXmin()

Then we estimate the x-min. This is the code that does that:

est <- estimate_xmin(pl_a)

We then update the power-law object with the new x-min value:

pl_a$setXmin(est)
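The object returned by estimate_xmin holds more than the cutoff itself, and it is worth a look before moving on. The sketch below uses simulated discrete data from the package's `rpldis` function rather than the Moby Dick data, purely so it runs quickly; the sample size, `xmin = 3`, and `alpha = 2.5` are arbitrary assumptions.

```r
library(poweRlaw)
set.seed(1)

# Simulated discrete power-law data (illustrative only, not the moby data)
x <- rpldis(2000, xmin = 3, alpha = 2.5)
m <- displ$new(x)

est <- estimate_xmin(m)
est$xmin             # estimated cutoff
est$pars             # exponent alpha estimated for the tail above that cutoff
est$gof              # distance between the data and the fitted power law
sum(x >= est$xmin)   # how many observations are left in the tail

# Passing the whole estimate updates the cutoff and the exponent together
m$setXmin(est)
m$getXmin()
m$getPars()
```

Checking how many observations remain in the tail is a useful sanity check: if the cutoff throws away nearly all of your data, the fit rests on very few points.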

We do a similar thing to estimate the exponent α of the power law. The package calls the parameters pars, so we first look at the current value and then estimate it:

pl_a$getPars()

estimate_pars(pl_a)
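For some intuition about what is being estimated: for continuous data, Clauset et al. give a closed-form maximum-likelihood estimate of the exponent, α = 1 + n / Σ ln(x_i / x_min), where the sum runs over the n observations at or above x_min. The base R sketch below checks that formula on simulated data. It is not the poweRlaw implementation, the helper names `r_powerlaw` and `alpha_mle` are hypothetical, and for discrete data like the Moby Dick counts the estimate will differ somewhat.

```r
set.seed(1)

# Draw from a continuous power law by inverse-transform sampling
r_powerlaw <- function(n, xmin, alpha) {
  xmin * (1 - runif(n))^(-1 / (alpha - 1))
}

# Closed-form maximum-likelihood estimate of alpha for the tail x >= xmin
alpha_mle <- function(x, xmin) {
  tail_values <- x[x >= xmin]
  1 + length(tail_values) / sum(log(tail_values / xmin))
}

x <- r_powerlaw(10000, xmin = 1, alpha = 2.5)
alpha_hat <- alpha_mle(x, xmin = 1)
alpha_hat   # should land close to the true value of 2.5
```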

Then we also want to know how plausible it is that our data follow a power law. For this we estimate a p-value (explained in Clauset et al). Here is the code to do that (and output those data):

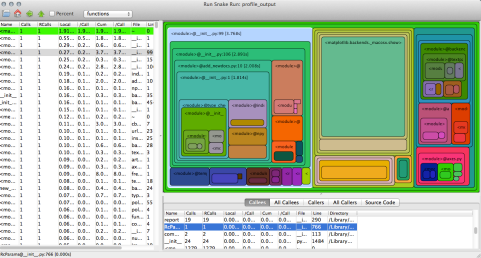

booty <- bootstrap_p(pl_a)

This will take a little while, so sit back and drink a cup of coffee while R chugs along for you.

Then look at the output:

booty

Alright, we don’t need the whole sim, but it’s good to have the goodness of fit (gof: 0.00825) and p value (p: 0.75), so this code below records those for you. Because the bootstrap is random, your p value will vary a little from run to run.

variables <- c("p", "gof")

bootyout <- booty[variables]

write.table(bootyout, file="/Volumes/file.csv", sep=",", append=FALSE, row.names=FALSE, col.names=TRUE)
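If the bootstrap is too slow, or you want the same p value every time you rerun the script, bootstrap_p takes arguments for the number of simulations, the number of threads, and a seed. The sketch below runs on a small simulated dataset (an assumption, so it finishes quickly) with only 50 simulations; fewer simulations means a coarser p value, so treat the settings as illustrative rather than recommended.

```r
library(poweRlaw)
set.seed(1)

# Small simulated dataset so the example finishes quickly (not the moby data)
x <- rpldis(500, xmin = 2, alpha = 2.5)
m <- displ$new(x)
m$setXmin(estimate_xmin(m))

# no_of_sims: number of bootstrap simulations
# threads:    number of cores to use
# seed:       makes the bootstrap reproducible
bs <- bootstrap_p(m, no_of_sims = 50, threads = 1, seed = 1)

bs$p                  # bootstrap p value
bs$gof                # goodness of fit for the original data
head(bs$bootstraps)   # one row per simulated dataset
```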

Next, we need to see if our data better fits a log-normal distribution. Here we compare our dataset to a log-normal distribution, and then compare the p-values and perform a goodness-of-fit test. If you have continuous data you’d use conlnorm for a continuous log normal distribution. Since we are using discrete data with the Moby dataset we use the function dislnorm. Again, just make sure you know which type of data you’re using.

### Estimating a log normal fit

aa <- dislnorm$new(moby)

We then set the xmin in the log-normal dataset so that the two distributions are comparable.

aa$setXmin(pl_a$getXmin())

Then we estimate the parameters as above

est2 <-estimate_pars(aa)

aa$setPars(est2$pars)

Now we compare our two distributions. Please note that it matters which order you put these in. Here I have the power-law value first with the log-normal value second. I discuss what ramifications this has below.

comp <- compare_distributions(pl_a, aa)

Then we actually print out the stats:

comp

And then I create a printable dataset that we can then look at later.

myvars <- c("test_statistic", "p_one_sided", "p_two_sided")

compout <- comp[myvars]

write.table(compout, file="/Volumes/file2.csv", sep=",", append=FALSE, row.names=FALSE, col.names=TRUE)

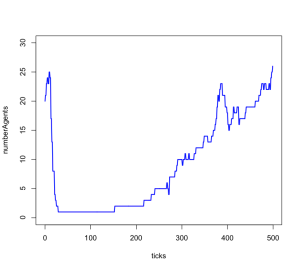

And now all we have left to do is graph it!

pdf(file="/Volumes/Power_Law.pdf", width=5.44, height=3.5, bg="white", paper="special", family="Helvetica", pointsize=8)

par(mar=c(4.1,4.5,0.5,1.2))

par(oma=c(0,0,0,0))

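# pts_a is not created anywhere above; here it is assumed to be the plotting data returned by plot(pl_a, draw=FALSE)

pts_a <- plot(pl_a, draw=FALSE)

plot(pts_a, col="black", log="xy", xlab="", ylab="", xlim=c(1,400), ylim=c(0.01,1))

lines(pl_a, col=2, lty=3, lwd=2)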

lines(aa, col=3, lty=2, lwd=1)

legend("bottomleft", cex=1, xpd=TRUE, ncol=1, lty=c(3,2), col=c(2,3), legend=c("powerlaw fit", "log normal fit"), lwd=1, yjust=0.5, xjust=0.5, bty="n")

text(x=70, y=1, cex=1, pos=4, labels=paste("Power law p-value: ", bootyout$p))

mtext("All regions, Size", side=1, line=3, cex=1.2)

mtext("Relative frequencies", side=2, line=3.2, cex=1.2)

legend=c("powerlaw fit", "log normal fit")

box()

dev.off()

Now, how do you actually tell which is better, the log normal or power-law? Here is how I describe it in my upcoming article:

The alpha parameter reports the slope of the best-fit power-law line. The power-law probability reports the probability that the empirical data could have been generated by a power law; the closer that statistic is to 1, the more likely that is. We consider values below 0.1 as rejecting the hypothesis that the distribution was generated by a power law (Clauset et al. 2009:16). The test statistic indicates how closely the empirical data match the log normal. Negative values indicate log-normal distributions, and the higher the absolute value, the more confident the interpretation. However, it is possible to have a test statistic that indicates a log-normal distribution in addition to a power-law probability that indicates a power-law, so we employ the compare distributions test to compare the fit of the distribution to a power-law and to the log-normal distribution. Values below 0.4 indicate a better fit to the log-normal; those above 0.6 favor a power-law; intermediate values are ambiguous.

Please note, though, that the interpretation depends on what order you put the two distributions in the R code: if you put log-normal in first in the above compare distributions code, then the above would be reversed, so that values below 0.4 would favor power-laws, while those above 0.6 would favor log normality. I may be wrong, but as far as I can tell it doesn’t actually matter which order you put the two distributions in, as long as you know which one went first and interpret it accordingly.
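You can check the effect of the ordering directly. The sketch below fits both distributions to a small simulated dataset (an assumption, chosen only for speed; the object names are hypothetical) and runs compare_distributions in both orders. As I understand the package, the test statistic is built from the log-likelihood of the first distribution minus that of the second, so swapping the arguments should flip its sign and turn the one-sided p value into one minus itself, while the two-sided p value stays the same.

```r
library(poweRlaw)
set.seed(1)

# Simulated discrete data (illustrative only, not the moby data)
x <- rpldis(1000, xmin = 1, alpha = 2.5)

# Power-law fit
m_pl <- displ$new(x)
m_pl$setPars(estimate_pars(m_pl)$pars)

# Log-normal fit, using the same xmin so the two fits are comparable
m_ln <- dislnorm$new(x)
m_ln$setXmin(m_pl$getXmin())
m_ln$setPars(estimate_pars(m_ln)$pars)

pl_first <- compare_distributions(m_pl, m_ln)
ln_first <- compare_distributions(m_ln, m_pl)

# Same comparison, opposite sign
c(pl_first$test_statistic, ln_first$test_statistic)
c(pl_first$p_one_sided, ln_first$p_one_sided)
c(pl_first$p_two_sided, ln_first$p_two_sided)
```

So the conclusion does not change with the order, only the direction in which you read the numbers.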

So, there you have it! Now you can run a power-law analysis on many types of data distributions to examine if you have a rich-get-richer dynamic occurring! Special thanks to Aaron Clauset for answering my questions when I originally began pursuing this research.

Full code at the end:

library("poweRlaw")

data("moby", package="poweRlaw")

pl_a <- displ$new(moby)

pl_a$getXmin()

est <- estimate_xmin(pl_a)

pl_a$setXmin(est)

pl_a$getPars()

estimate_pars(pl_a)

booty <- bootstrap_p(pl_a)

variables <- c("p", "gof")

bootyout <- booty[variables]

#write.table(bootyout, file="/Volumes/file.csv", sep=",", append=FALSE, row.names=FALSE, col.names=TRUE)

### Estimating a log normal fit

aa <- dislnorm$new(moby)

aa$setXmin(pl_a$getXmin())

est2 <-estimate_pars(aa)

aa$setPars(est2$pars)

comp <- compare_distributions(pl_a, aa)

comp

myvars <- c("test_statistic", "p_one_sided", "p_two_sided")

compout <- comp[myvars]

write.table(compout, file="/Volumes/file2.csv", sep=",", append=FALSE, row.names=FALSE, col.names=TRUE)

pdf(file="/Volumes/Power_Law.pdf", width=5.44, height=3.5, bg="white", paper="special", family="Helvetica", pointsize=8)

par(mar=c(4.1,4.5,0.5,1.2))

par(oma=c(0,0,0,0))

# pts_a is not created anywhere above; here it is assumed to be the plotting data returned by plot(pl_a, draw=FALSE)

pts_a <- plot(pl_a, draw=FALSE)

plot(pts_a, col="black", log="xy", xlab="", ylab="", xlim=c(1,400), ylim=c(0.01,1))

lines(pl_a, col=2, lty=3, lwd=2)

lines(aa, col=3, lty=2, lwd=1)

legend("bottomleft", cex=1, xpd=TRUE, ncol=1, lty=c(3,2), col=c(2,3), legend=c("powerlaw fit", "log normal fit"), lwd=1, yjust=0.5, xjust=0.5, bty="n")

text(x=70, y=1, cex=1, pos=4, labels=paste("Power law p-value: ", bootyout$p))

mtext("All regions, Size", side=1, line=3, cex=1.2)

mtext("Relative frequencies", side=2, line=3.2, cex=1.2)

legend=c("powerlaw fit", "log normal fit")

box()

dev.off()